No, Your Mind Can’t Be Uploaded to a Computer

Sorry, folks, but digital immortality by upload is impossible …

Like the song says, “everyone wants to be on a postage stamp, but nobody wants to die.”

And lately, one of the hottest topics for those who’d like to keep the party going forever is mind uploading, the notion that we can transfer our consciousness to machines. But as with all immortality schemes that have come before it, there are fatal flaws in the plan.

The first thing we need to recognize is that consciousness, the only type of “mind” that could reasonably be uploaded in order to bestow an immortality worthy of the name, is a bodily function. It’s something our bodies do. We don’t yet know how it’s done, but one way or another, our brains manage to generate what neuroscientists call percepts (and philosophers call qualia), which are the building blocks of sentience, of conscious experience, such as sounds, colors, odors, flavors, the agony of pain, the awareness of our bodies, and everything else that our brains fuse together to create our conscious world, whether in our waking day, in our dreams, or in hallucinations.

So we need to ask ourselves what it would mean to transfer a bodily function to a machine. How would it be possible to gather information about, for instance, your breathing, or your digestion, or your circulation and thereby “transfer” that actual bodily function to a mechanical device, so that it is somehow still “your” breathing, digestion, or circulation? The answer is that it’s not. It doesn’t even make sense. Even if you built an atom-for-atom replication of these physical systems and somehow got them to operate inside a machine, they still wouldn’t be “yours”, especially since the time lag involved in creating them would mean that they would no longer be identical to your own systems and functions by the time they could start operating.

The Computer Illusion

Of course, the argument is made that the conscious mind is different from all those other bodily functions. Specifically, that the brain is a kind of organic computer, that consciousness is a program running on it, and one’s self-identity is merely stored memory accessed by the program. So if you could duplicate the program that’s running on your brain’s hardware, copy the data in your memory to a machine computer and run the “you” program on it, voilà, your mind would be “transferred” to that computer. And if that computer were in the body of a humanoid robot, you could live indefinitely as a Westworld-style android.

There is some merit to this view at first blush. It’s true that our sense of continuous identity arises from our memory. If suddenly you were to lose all of your existing memories as well as your ability to form new ones, you would lose your sense of being who you are, even if your brain had no problem creating percepts in real time. When you woke up in the morning, you would still see, smell, and hear, and sense your own body, but you would effectively be no one, as though you had been born fully grown that very minute.

But is your brain really a computer running a program? Well, that depends on what you mean by “computer” and “program”.

Computing devices are ancient. The most well-known is the abacus, a tray or box containing small objects that can be moved rapidly from side to side on a dowel or in a channel to help a person, such as a shopkeeper, perform addition and subtraction and such. However, these devices were not programmable. In other words, they could not perform the calculations on their own, but had to be manipulated by a human being who knew how the calculations should be done.

The first programmable machines were not for computing (i.e., making calculations). They performed physical operations such as playing music or weaving fabric. In the 1830s, Charles Babbage’s design for a device known as the Analytical Engine combined the two concepts into a machine capable of performing certain mathematical calculations automatically, although it was never completed. About a century later, Alan Turing devised a theory for a machine which could perform any calculation that could potentially be performed by a “human computer,” meaning a person making a mathematical calculation on paper with unlimited time to do so. This “universal” machine is the basis for all modern computers.

Both programmable and non-programmable calculating devices share two aspects of their outputs, physical and symbolic. The physical end-state of the device represents a concept which can be read and understood by a human user. In other words, the positions of the beads or stones of an abacus would be meaningless to the cat in Hokusai’s painting, as well as to any person who doesn’t know what the thing is used for. By the same token, patterns on a screen or a printed page are also meaningless to any observer who isn’t versed in that symbol system. By itself, the computer isn’t actually calculating anything; it’s simply performing pointless motions. It is only as part of a system involving human brains — which construct the device and interpret its output — that the machine can be said to be “calculating” by making the human observer think of the meaning of the symbolic result. If all you know are Roman numerals, the output “4,263” is gibberish.
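To make that concrete, here is a minimal sketch in Python of one physical end-state being read under several symbol systems. The two-byte state and the helper names `to_roman` and `readings` are illustrative assumptions, not taken from any real device; the point is only that the bytes stay the same while the "meaning" depends on the convention the reader brings.

```python
def to_roman(n: int) -> str:
    """Render a positive integer in additive Roman numerals (4000 becomes MMMM)."""
    pairs = [
        (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
        (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
        (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
    ]
    out = []
    for value, symbol in pairs:
        count, n = divmod(n, value)  # how many times this symbol fits
        out.append(symbol * count)
    return "".join(out)


def readings(state: bytes) -> dict:
    """Interpret the same bytes under four different (assumed) conventions."""
    value = int.from_bytes(state, "big")
    return {
        "arabic": f"{value:,}",                             # digits with a comma
        "roman": to_roman(value),                           # Roman numerals
        "binary": "".join(f"{b:08b}" for b in state),       # raw bit pattern
        "little_endian": int.from_bytes(state, "little"),   # bytes read in reverse order
    }


if __name__ == "__main__":
    # Hypothetical end-state: two bytes sitting in memory.
    for name, text in readings(bytes([0x10, 0xA7])).items():
        print(f"{name:>13}: {text}")
```

Nothing in the bytes themselves picks out one of these readings as the right one. That choice lives in the reader.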

But while the computer itself does not, for example, do any real-world addition or subtraction of things — that is, the abacus or the computerized cash register doesn’t add any coins to the till or remove them — it is possible to combine computing devices with other sorts of machines to do physical work driven by “computations”, which are simply automatic changes of states according to a set of rules (the program). These sorts of computer-machine combinations are how we get computer-driven robots to paint cars, for example. And this sort of work is indeed essentially similar to many human bodily functions, such as motor functions like moving our arms and legs, mediated by the brain’s motor cortex.

In these situations, we’re dealing with a different type of “computation” which is not concerned with performing mathematical calculations (although all of these operations can be described mathematically), but rather with performing physical iterations, or repetitions of various kinds of changes of state. In A New Kind of Science, published in 2002, Stephen Wolfram introduced the idea that the entire universe is in fact a computer of this sort. Every change of state of everything that exists is quite literally a computation, an initial state following a rule to reach an end state, an input and a programmed output. And the program is the set of physical laws.
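For readers who want to see what "an initial state following a rule to reach an end state" looks like in practice, here is a small sketch of an elementary cellular automaton, the kind of system Wolfram studies in the book. The choice of Rule 30, the wrap-around edges, and the function name `step` are assumptions made for illustration.

```python
def step(cells: list[int], rule: int = 30) -> list[int]:
    """Apply one update of an elementary cellular automaton.

    Each cell's next value depends only on itself and its two neighbours.
    The row is treated as a ring, so the edges wrap around (an assumption).
    """
    n = len(cells)
    out = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as a number 0..7
        out.append((rule >> index) & 1)              # look up that bit of the rule number
    return out


if __name__ == "__main__":
    row = [0, 0, 0, 1, 0, 0, 0]  # initial state: a single "on" cell
    for _ in range(3):
        print("".join("#" if c else "." for c in row))
        row = step(row)
```

The whole "program" here is a single number, and running it is nothing more than repeatedly changing the state of the row according to that rule.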

What’s more, the program has no independent existence outside of the physical “machinery” itself, but rather is an abstraction that we derive by observation. For example, when Newton made his calculations about the strength of gravity (that its attractive force is proportional to the product of the masses of the objects involved, and that it weakens in proportion to the square of the distance between the objects, so that tripling the distance will reduce the force to 1/9 of what it had been and so on), he had no clue why his numbers were what they were. It was not until Einstein modeled gravity as the warping of spacetime that we understood how those values could arise from the physics of the universe. The “rules” of the program are simply the properties of the “hardware” itself.
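The arithmetic behind the 1/9 figure can be checked with a short sketch of Newton's inverse-square law. The masses and distances below are arbitrary illustrative values, and the function name `gravitational_force` is an assumption; only the ratio matters.

```python
G = 6.674e-11  # gravitational constant in N m^2 / kg^2 (approximate)


def gravitational_force(m1: float, m2: float, r: float) -> float:
    """Newtonian attraction between two point masses a distance r apart."""
    return G * m1 * m2 / r**2


if __name__ == "__main__":
    # Arbitrary example values: two masses in kilograms, distance in metres.
    near = gravitational_force(5.0, 7.0, 1.0)
    far = gravitational_force(5.0, 7.0, 3.0)  # same masses, three times the distance
    print(far / near)  # approximately 1/9
```

The formula describes the behaviour without explaining it, which is exactly the position Newton was in.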

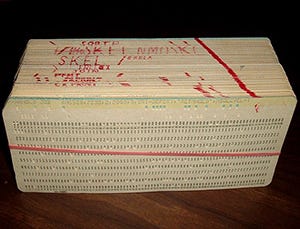

And the same is true of our computers, like the one I’m writing this article on right now and the one you are, probably, using to read it. It was true of the punch-cards that ran the first programmable looms, as well as those that ran IBM machines when I was first introduced to computers as a kid.

Two centuries ago, it was easy to see that the Analytical Engine was just as much a physical device as was an automated loom or piano. Nobody would think that any “software” independent of the hardware was involved. But today, symbolic programming languages and graphical programming interfaces make it easy to fall into the illusion that software is not hardware. In fact, the laws of physics are the only program around, and they are the same thing as the hardware. All we are doing when we program computers is tweaking their physical states so that when we run power through them they do what we want and reach some sort of useful end state, whether that’s a pattern of lights on a screen or a pattern of impulses in wires that move a spray-paint gun on the end of a robotic arm, or any other outcome.

It is easy to believe that computers, or “information processors”, are literally processing some kind of stuff called information in the way that a food processing plant processes corn, and that this information exists independently within the machine. And it is easy to view our brains in the same way, as information processors which literally contain information-stuff. But like the laws of physics, or large-scale properties of matter such as temperature and density and pressure, information is also an abstraction. It’s a darn useful one, to be sure, but an abstraction nonetheless. We live in a world of space, time, energy, and matter. Information does not exist as its own sort of thing, independent of these.

It is an error of some popular interpretations of science to treat information as stuff, and to think of the “contents” of our minds, whatever that may mean, as information. And this error makes it easy to imagine that such “content” could be “transferred” to a general purpose computer, from one “information processor” to another. But it doesn’t work like that.

Consciousness is not information. It is a biological activity. To get a machine to perform that same activity, it will have to physically do what the brain is doing, or something equivalent. General purpose computers are not built to do that. And no amount of programming can get them to. We understand the basic mechanics of moving our arms, so we can build computer-machine combos to mimic that activity. But since we don’t know how our brains generate conscious percepts, we cannot build a machine to have conscious experiences, with or without a computer in it. (This is also why the internet will never become sentient — it’s not built to be.)

The Turing Myth

There is another prevalent error within the computing world regarding the brain and the universal computer theorized by Turing: the mistaken idea that Turing proved that a general purpose computer can do anything that a human brain can do. This leads some in the computing field to assume that general purpose computers can be conscious if properly programmed, and therefore that mind uploading must be possible, even if we haven’t yet figured out how to make it happen.

Here’s how the Stanford Encyclopedia of Philosophy (where you can read about the issue in detail) sums up the problem:

Unfortunately a myth has arisen concerning Turing’s paper of 1936, namely that he there gave a treatment of the limits of mechanism, and established a fundamental result to the effect that the universal Turing machine can simulate the behaviour of any machine. This myth has passed into the philosophy of mind, theoretical psychology, cognitive science, computer science, Artificial Intelligence, Artificial Life, and elsewhere — generally to pernicious effect.

The thing is, consciousness is not a calculated end-state, but a purely physically computed one, in Wolfram’s sense of the term. In order to produce actual percepts in the real world, a computing device would need to be combined with some other sort of machinery to produce actual extant percepts in our spacetime. Just as a computing device by itself cannot reproduce human breathing, digestion, or circulation — but only symbolic representations of these processes — no computing device by itself can reproduce human conscious awareness. This is not a problem of technological advancement. It simply can’t be done.

A lot of progress has been made in our understanding of what we might call the daisy-chain processes of the brain. Our skin contacts a hot surface, nerve cells react biochemically, triggering a cascade of electrochemical impulses along a series of nerves to our spinal column, up to the brain stem, then through our motor cortex, and then out to our muscles, which contract and withdraw our skin from the hot surface. That is, of course, a grossly oversimplified description of what’s actually going on, but it is the kind of thing that nervous systems originally evolved to do.

Somewhere along the line, the brains of complex animals developed a qualitatively different ability, an entirely new trick — the production of percepts. No amount of daisy-chain activity can do that. At some point, our brains evolved the “other machinery” necessary to create colors and sounds and smells and such. And we are still trying to understand what and where that machinery is. But examining working brains in living animals is extremely hard to do, and typically requires indirect and incomplete means.

One of the more promising recent advances in our theories for the machinery of consciousness is CEMI, conscious electromagnetic information field theory, which proposes that evolution used the “junk” electromagnetic fields produced by neural activity to perform the new trick. Investigations into CEMI are still in the embryonic stages, and it may not pan out, but at least it has the potential to lead us to an answer. Continuing to follow the daisy chain does not.

Making Replicants

Another notion that has been proposed for eternalizing the mind is to find a way to construct components that mimic the functions of neurons, and cobble them together in the right way. While the challenges to any such effort are enormous, to say the least, it becomes easier to imagine if we think of the conscious mind as “information” and neurons as simple switches in the daisy chain. Looking at it like that, we can ignore the brain’s actual physical structure, and imagine that the components of a conscious machine could be laid out on a table-top, that their arrangement is irrelevant, and that issues like processing speed can be ignored.

But as usual, such oversimplifications are too good to be true. And if anything like CEMI turns out to be accurate, they are right out the window. The position that the actual physical structure of the brain is irrelevant to consciousness as long as the flow of information can be preserved is called “opportunistic minimalism”, a type of “spherical cows” argument, after an old joke about theoretical physicists. Here is how Ned Block described the state of the conflict between biological and minimalist views in 2009, noting that consciousness and “thought” or intelligence are not the same thing:

We do not know how to make a machine that can think, but whatever difficulties are involved in making a machine think, they are not difficulties about consciousness…. The biological theory says that only machines that have the right biology can have consciousness, and in that sense the biological account is less friendly to machine consciousness….

As should be apparent, the competitors to the biological account are profoundly nonbiological, having more of their inspiration in the computer model of the mind of the 1960s and 1970s than in the age of the neuroscience of consciousness of the 21st century. As [Daniel] Dennett confesses, “The recent history of neuroscience can be seen as a series of triumphs for the lovers of detail. Yes, the specific geometry of the connectivity matters; yes, the location of the specific neuromodulators and their effects matter; yes, the architecture matters; yes, the fine temporal rhythms of the spiking patterns matter, and so on. Many of the fond hopes of the opportunistic minimalists have been dashed: they had hoped they could leave out various things, and they have learned that no, if you leave out x, or y, or z, you can’t explain how the mind works.” Although Dennett resists the obvious conclusion, it is hard to avoid the impression that the biology of the brain is what matters to consciousness — at least the kind we have — and that observation favors the biological account. (The Cognitive Neurosciences, fourth edition)

And there is yet another hurdle in the path of attempts at mechanical replication: the body map. Each of our brains contains a neurological map of the body it’s in. Without a matching body map, the brain cannot work effectively. So even if the physical functioning of your brain could be replicated in a machine brain, it wouldn’t work properly in its android shell. And we know from actual instances of body-map errors in the human brain that the conscious psychological experience would be a living hell. At every waking moment, your mind would be setting off alarm signals and screaming at you that things were terribly, horribly wrong. So in addition to figuring out how to somehow craft a synthetic brain that mimics the detailed small-scale biological functions of your organic brain, the synthetic brain would have to be altered so as to map to the new body mechanism in which it is placed.

When you consider that the brain has roughly 86 billion neurons, a number on the same scale as the stars in our galaxy, and that the connections between them number in the hundreds of trillions, the goal of brain replication would appear to be a castle in the sky, even if we could somehow create workable synthetic neurons, which itself is highly doubtful.

This is not to say that humans will never unlock the secrets to immortality of the mind. I’m not waiting up nights, myself, but who knows? What we do know, however, is that mind uploading will not work, because it cannot work.

Paul Thomas Zenki is an essayist, ghostwriter, copywriter, marketer, songwriter, and consultant living in Athens, GA.